Mixing and Matching Standards to Ease AR Integration within Factories

AREA member Bill Bernstein of the National Institute of Standards and Technology (NIST) shares his organization’s early work to improve AR interoperability.

Today, most industrial Augmented Reality (AR) implementations are based on prototypes built in testbeds designed to determine whether particular AR components are mature enough to solve real-world challenges. Because manufacturing is a mature industry, it has widely accepted principles and best practices. In the real world, however, companies “grow” their factories organically. The result is a vast mixing and matching of domain-specific models (e.g., machining performance models, digital solid models, and user manuals) tightly coupled with domain-agnostic interfaces (e.g., rendering modules, presentation modalities, and, in a few cases, AR engines).

As a result, even after organizations have spent years developing their own one-off installations, integrating AR to visualize these models is still largely a pipe dream. Using standards could ease the challenges of integration, but experience with tying them all together in a practical solution is severely lacking.

To address the needs of engineers facing an array of different technologies under one roof, standards development organizations (SDOs), such as the Institute of Electrical and Electronics Engineers (IEEE), the Open Geospatial Consortium (OGC), and the Khronos Group, have proposed standard representations, modules, and languages. Because the experts of one SDO are often isolated from the experts in another domain or SDO when developing their specifications, the results are not easily implemented in the real world, where there is a mixture of pre-existing and new standards. This lack of communication between SDOs during standards development is especially pronounced between domain-agnostic groups (e.g., the World Wide Web Consortium (W3C) and the Khronos Group) and domain-heavy groups (e.g., the American Society of Mechanical Engineers, the MTConnect Institute, and the Open Platform Communications (OPC) Foundation).

However, both perspectives – domain-specific thinking (e.g., for manufacturing or field maintenance) and AR-specific, domain-agnostic concerns (e.g., real-world capture, tracking, or scene rendering) – are vital for successfully introducing AR and producing long-term value from it.

Smart Manufacturing Environments

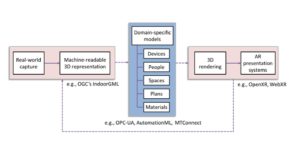

In the case of smart manufacturing systems (SMS), SMS-specific standards (e.g., MTConnect and OPC Unified Architecture) provide the necessary semantic and syntactic descriptions of concepts, such as information about devices, people, and materials. Figure 1 shows the current state of industrial AR prototyping, with examples of standards that inform each process.

Figure 1: General workflow for generating industrial AR prototypes. The dotted purple lines signify flows that are currently achieved through significant human labor and expertise.

From a high-level view, the AR community is focused on two separate efforts:

- Digitizing real-world information (shown on the left of Figure 1);

- Rendering and presenting AR scenes to the appropriate visualization modalities (shown on the right of Figure 1).

To produce successful and meaningful AR experiences, it is vital to connect domain-specific models with domain-neutral technologies. In the current state of AR development, where vendors have implemented few or no standards, this task is expert-driven and requires many iterations, human hours, and experience. There are significant opportunities for improvement if these transformations (indicated by the dotted purple lines in Figure 1) could be automated.

In the Product Lifecycle Data Exploration and Visualization (PLDEV) project at NIST, we are experimenting with the idea of leveraging standards developed in two separate worlds: geospatial and smart manufacturing (or Industry 4.0). One project, shown in Figure 2, integrates both IndoorGML, a standard to support indoor navigation, and CityGML, a much more detailed and expressive standard that can be used for contextually describing objects in buildings, with MTConnect, a standard that semantically defines manufacturing technologies, such as machine tools. All these standards have broad support in their separate communities. Seemingly every day, supporting tools that interface directly with these representations are pushed to public repositories.

Figure 2: One instance of combining disparate standards for quick AR prototype deployment for situational awareness and indoor navigation in smart manufacturing systems.

In Figure 2, we show the use of IndoorGML and CityGML in a machine shop that has previously been digitalized according to the MTConnect standard. We leverage existing AR visualization tools to render the scene, and then connect to the streaming data from the shop to indicate whether a machine is available (green), unavailable (yellow), or in use (red). Though this is a simple example, it shows that when standards are appropriately implemented and deployed, developers can acquire capabilities “for free.” In other words, we can leverage domain-specific and domain-agnostic tools that are already built to support existing standards, helping realize a more interoperable AR prototyping workflow.
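The status coloring described above can be illustrated with a minimal Python sketch. It is not the PLDEV implementation. It assumes a hand-written, simplified MTConnect Streams document (XML namespace omitted, made-up device names), and it assumes that "in use" corresponds to an `Execution` value of `ACTIVE`; the function names `status_color` and `machine_colors` are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hand-written, simplified stand-in for an MTConnect Streams response.
# Device names are invented; a real agent response carries a namespace,
# a header, timestamps, and many more data items.
SAMPLE = """<MTConnectStreams>
  <Streams>
    <DeviceStream name="Mill-1" uuid="mill-1">
      <ComponentStream component="Device" name="Mill-1">
        <Events>
          <Availability dataItemId="avail">AVAILABLE</Availability>
          <Execution dataItemId="exec">ACTIVE</Execution>
        </Events>
      </ComponentStream>
    </DeviceStream>
    <DeviceStream name="Lathe-1" uuid="lathe-1">
      <ComponentStream component="Device" name="Lathe-1">
        <Events>
          <Availability dataItemId="avail">AVAILABLE</Availability>
          <Execution dataItemId="exec">READY</Execution>
        </Events>
      </ComponentStream>
    </DeviceStream>
    <DeviceStream name="Saw-1" uuid="saw-1">
      <ComponentStream component="Device" name="Saw-1">
        <Events>
          <Availability dataItemId="avail">UNAVAILABLE</Availability>
          <Execution dataItemId="exec">UNAVAILABLE</Execution>
        </Events>
      </ComponentStream>
    </DeviceStream>
  </Streams>
</MTConnectStreams>"""


def local_name(tag):
    """Strip an optional '{namespace}' prefix from an element tag."""
    return tag.rsplit("}", 1)[-1]


def status_color(availability, execution):
    """Map a machine's state to the colors used in the article.

    Assumption: 'in use' means Execution == ACTIVE.
    """
    if availability != "AVAILABLE":
        return "yellow"  # unavailable
    if execution == "ACTIVE":
        return "red"     # in use
    return "green"       # available


def machine_colors(xml_text):
    """Return {device name: color} for every DeviceStream in the document."""
    root = ET.fromstring(xml_text)
    colors = {}
    for device in root.iter():
        if local_name(device.tag) != "DeviceStream":
            continue
        values = {}
        for item in device.iter():
            name = local_name(item.tag)
            if name in ("Availability", "Execution"):
                values[name] = (item.text or "").strip()
        colors[device.get("name")] = status_color(
            values.get("Availability"), values.get("Execution")
        )
    return colors


if __name__ == "__main__":
    for machine, color in machine_colors(SAMPLE).items():
        print(machine, color)
```

In a deployment, the XML would come from the shop's MTConnect agent rather than a string constant, and the resulting colors would be handed to whatever AR tool renders the scene.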

Future Research Directions

This project has also demonstrated significant future research opportunities in sensor fusion for more precise geospatial alignment between the digital and real worlds. One example is leveraging onboard sensors from automated guided vehicles (AGVs) and more contextually defined, static geospatial models described using OGC standards IndoorGML and CityGML.

Moving forward, we will focus on enhancing geospatial representations with additional context. For example, such context could (1) allow AGVs to treat task-specific obstacles (like worktables) differently than disruptive ones (like walls and columns) and (2) help human operators equipped with wearables avoid safety hazards through more intelligent rendering of digital objects. We are currently collaborating with the Measurement Science for Manufacturing Robotics program at NIST to investigate these ideas.
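As a purely illustrative sketch of the first idea, the Python snippet below attaches a context label to each obstacle and lets an AGV policy look up a different behavior per label. Everything here is an assumption for illustration: the `ObstacleKind` labels, the clearance values, and the function names are hypothetical and do not come from IndoorGML, CityGML, or the NIST project.

```python
from dataclasses import dataclass
from enum import Enum


class ObstacleKind(Enum):
    TASK_SPECIFIC = "task_specific"  # e.g., a worktable the AGV may need to approach
    DISRUPTIVE = "disruptive"        # e.g., a wall or column the AGV should keep clear of


@dataclass(frozen=True)
class Obstacle:
    name: str
    kind: ObstacleKind


# Hypothetical minimum clearances in metres, chosen only for illustration.
CLEARANCE_M = {
    ObstacleKind.TASK_SPECIFIC: 0.1,
    ObstacleKind.DISRUPTIVE: 0.5,
}


def required_clearance(obstacle: Obstacle) -> float:
    """Look up how closely the AGV may pass the obstacle."""
    return CLEARANCE_M[obstacle.kind]


def may_dock(obstacle: Obstacle) -> bool:
    """Only task-specific obstacles are valid docking targets."""
    return obstacle.kind is ObstacleKind.TASK_SPECIFIC


if __name__ == "__main__":
    for obstacle in (
        Obstacle("worktable", ObstacleKind.TASK_SPECIFIC),
        Obstacle("column", ObstacleKind.DISRUPTIVE),
    ):
        print(obstacle.name, required_clearance(obstacle), may_dock(obstacle))
```

The point of the sketch is only that the distinction lives in the model's context label, so the planner does not have to infer it from geometry alone.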

If this integration succeeds, we will be able to demonstrate what we encourage others to practice: adoption of standards for faster and lower-cost integrations as well as safer equipment installations and factory environments. Stay tuned for the next episode in this mashup of standards!

Disclaimer

No endorsement of any commercial product by NIST is intended. Commercial materials are identified in this report to facilitate better understanding. Such identification does not imply endorsement by NIST nor does it imply the materials identified are necessarily the best for the purpose.