Mission Control Lab Uses AR to Reveal Connections and Drive “Inventure”

According to Jessica Cobb, founder and CEO of Mission Control Lab, one of the great obstacles to helping people of all ages develop an interest in science, technology, and invention is that so much of technology is hidden from view.

“At Mission Control Lab, we want to reveal embedded cyber-physical systems,” said Jessica in an interview from her base in the Netherlands. “Because you can’t see the workings behind technology, it’s frustrating and limiting and decreases connectivity.”

Jessica, who describes herself as an “emerging technologist, founder, maker, inventor, digital unicorn, and serial entrepreneur,” has devoted her professional life to revealing what’s hidden, bringing forth the fascinating connections between electronic components to engage learners in pursuing what she calls “inventure™” (“invention plus adventure”). She has turned to Augmented Reality (AR) to assist in that process.

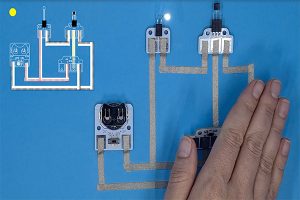

“We’re using AR in our products to reveal the webbing between networks in our systems design exercises,” Jessica explained. “One of our products is kind of like electronic Legos with light, movement, and sound. With AR, you can see those electronic Lego parts being appliquéd onto different surfaces and link those connections back to a particular application.”

The products Jessica refers to are sold as MakeON® kits, and demonstrations of the kits in action are available online.

For the AR ecosystem, Mission Control Lab's work is more than a clever use of AR to bring concepts to life. It also builds a diverse and inclusive global community of innovators who appreciate the value of AR, which should help drive AR adoption as more AR-experienced people enter the workplace.

“At Mission Control Lab, we make this connection between individuals, industry, and education,” she explained. “This gives us a better sense of what’s going on in terms of needs for the workforce, as well as what’s meaningful for people, enabling us to create a relationship with the future of work now. That’s why the AR piece of it is so important; it’s engaging interest, meaning, and intrigue.”

For Jessica, the goal isn’t simply to show people how to build stuff.

“It’s not the end invention where the real social impact and change occurs,” she said. “It’s in the space between adventure and invention. If we are cultivating that space cooperatively, a lot of the challenges we’re facing in education and workforce development just dissolve. That’s what Mission Control Lab is about. Our products MakeON® and Inventure embody the storytelling around identity, the individual, and emerging technology.”

With its newly launched Inventure initiative, Mission Control Lab is seeking to engage directly with enterprise partners to build what Jessica calls "future fitness pathways." Her vision is to create an online space where people and organizations can collaborate and access cutting-edge media content.

“With Inventure, an 11-year-old in France is going to be able to talk about some new tech and apply it in the Inventure space with an 11-year-old in Indonesia. It’s kind of a mixture of LinkedIn, Instagram, and Hunger Games,” she laughed.

Jessica is also actively planning to use AR in a way similar to IKEA Kreativ, the iOS-based AR tool that lets customers of the Swedish furniture company capture their rooms in 3D and decorate them virtually.

"We want you to be able to make wearables, walls, and objects come alive with AR, and then replicate that in real life," said Jessica. Mission Control Lab expects that experience to reach the market within the next quarter.

AREA members and others in the wider AR ecosystem can support Mission Control Lab's work by exploring partnership and sponsorship opportunities. For more information, please contact Jessica Cobb.