This editorial has been developed as part of the AREA Thought Leaders Network content, in collaboration with selected AREA members.

Short of time? Listen to the accompanying podcast (~10 minutes) available here.

An imperative to overcome limitations

The COVID-19 pandemic has had an unprecedented impact across the global business landscape. Over recent months, many countries have implemented various forms of lockdown, severely limiting the ways that companies can do business and, in many cases, forcing operations to cease. This crisis is likely to have an ongoing impact in the months ahead as we transition to a “new normal” and beyond.

This editorial discusses ways in which Augmented Reality (AR) can help mitigate the societal and business impact while supporting business continuity through the pandemic.

The restrictions placed upon both individuals and organizations have resulted in an upsurge in the use of digital technologies to support a variety of activities, including online shopping, digital and contactless payments, remote working, online education, telehealth, and entertainment. The ability to support these activities is heavily reliant upon the availability of “digital-ready” infrastructure and services.

Enterprise AR builds on this digital infrastructure by superimposing digital content on a live view of the physical world to support business processes. So how can AR help?

First, let’s examine the impacts that COVID-19, and the responses to it, have had on business and society:

- Social distancing measures hinder traditional face-to-face interaction and often limit the size of groups that can gather.

- Travel restrictions and the prevalence of key staff working from home affect, among other things, the ability to conduct business, manage teams effectively, and provide local expertise where it is needed.

- Having fewer on-site staff, due to illness, self-isolation, and financial restrictions, impedes an organization’s ability to continue operations “as before.”

- A lack of classroom and hands-on training makes it difficult to quickly upskill new staff or train existing staff on products and processes.

- Disrupted supply chains are requiring manufacturing and sourcing processes to become more flexible to help ensure continuity of production.

- The potential for virus transmission has caused a reluctance among workers to touch surfaces and objects that may have been touched by others.

Clearly, to help address these challenges, new or enhanced tools and ways of working are required. At the AREA, we believe that AR can play an effective role in mitigating a number of these obstacles and, at the same time, offering new opportunities to provide long-term business improvements.

AR can help address COVID-19 restrictions with remote assistance

A key use case of Enterprise AR is remote assistance. AR-enhanced remote assistance provides a live video-sharing experience between two or more people. It differs from traditional videoconferencing in that AR tools use computer vision technology to “track” the movements of the device’s camera across the scene. This enables participants to add annotations (such as redlining or other simple graphics) that “stick” to elements in the scene and therefore remain in the same place in the physical world as the users view it. Such applications support highly effective collaboration between, for example, a person attending a faulty machine and a remote expert who may be working from home. This use case helps mitigate the impacts of reduced travel, reduced staffing, and, of course, social distancing.
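
To illustrate why a tracked annotation appears to “stick”, here is a minimal Python sketch under simplifying assumptions: the annotation is stored once as a fixed 3D point in world coordinates, the AR tracker (not shown) is assumed to supply the camera’s position and orientation for every frame, and an ideal pinhole camera with made-up intrinsics (`FOCAL_PX`, `CX`, `CY`) stands in for a calibrated device camera. All names are hypothetical and do not reflect any vendor’s API.

```python
import math

# Hypothetical pinhole-camera intrinsics for a 640x480 image (in pixels).
FOCAL_PX = 800.0
CX, CY = 320.0, 240.0


def rotation_y(angle_rad):
    """Camera-to-world rotation about the vertical (y) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


IDENTITY = rotation_y(0.0)


def world_to_camera(point, cam_rotation, cam_position):
    """Express a fixed world-space point in the moving camera's frame.

    cam_rotation is camera-to-world, so its transpose maps the world-space
    offset back into camera axes (the camera looks along +z).
    """
    d = [point[i] - cam_position[i] for i in range(3)]
    return [sum(cam_rotation[r][i] * d[r] for r in range(3)) for i in range(3)]


def project(point_cam):
    """Pinhole projection to pixel coordinates; None if behind the camera."""
    x, y, z = point_cam
    if z <= 0:
        return None
    return (FOCAL_PX * x / z + CX, FOCAL_PX * y / z + CY)


def annotation_pixel(anchor_world, cam_rotation, cam_position):
    """Where a world-anchored annotation should be drawn in the current frame."""
    return project(world_to_camera(anchor_world, cam_rotation, cam_position))


if __name__ == "__main__":
    # The remote expert drops a marker 2 m in front of the on-site camera.
    anchor = (0.0, 0.0, 2.0)
    # Frame 1: camera at the origin, looking straight ahead.
    print(annotation_pixel(anchor, IDENTITY, (0.0, 0.0, 0.0)))
    # Frame 2: the tracker reports the camera moved 0.5 m to the right,
    # so the marker is redrawn further left, over the same physical spot.
    print(annotation_pixel(anchor, IDENTITY, (0.5, 0.0, 0.0)))
```

Running it prints `(320.0, 240.0)` and then `(120.0, 240.0)`: the marker’s screen position changes as the camera moves, yet it keeps pointing at the same physical spot. Real products also handle lens calibration, arbitrary 3D rotations, and continuous pose re-estimation, which this sketch deliberately leaves out.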

AR-enhanced remote assistance for medical equipment procedures (YouTube video). Image and video courtesy of RE’FLEKT.

Sarah Reynolds, Vice President of Marketing, PTC comments, “As organizations look to maintain business continuity in this new normal, they are embracing AR to address travel restrictions, social distancing measures, and other challenges impacting their front-line workers’ ability to go on-site and operate, maintain, and repair machines of all kinds. Even when equipment or product experts can’t address technical issues in person, AR-enhanced remote assistance enables them to connect with on-site employees and even end customers to offer them contextualized information and expert guidance, helping them resolve these issues quickly and ultimately reduce downtime. AR-enabled remote assistance marries the physical and the digital worlds – allowing experts and front-line workers to digitally annotate the physical world around them to improve the clarity, precision, and accuracy of their communication and collaboration.”

AR-enhanced remote assistance enables business continuity for machine operations, servicing and repair. Image courtesy of PTC.

AR enables no-touch product interaction via virtual interfaces

A key capability of AR is the ability to superimpose a virtual user interface on physical equipment that may have a limited or non-existent user interface of its own. Depending on the technology used, the user can select actions by tapping the device’s screen or, alternatively, use hand gestures or voice commands to interact with the equipment via the AR-rendered “proxy” user interface. Such abstracted interactions are key to reducing how often people must touch physical objects that may be shared by many others.

There are many ways in which such AR capabilities can help medical professionals carry out their duties during the current pandemic and beyond. The BBC has reported on one such application that reduces physical contact between doctor and patient while still enabling the doctor to communicate with colleagues outside the COVID-19 treatment area. Here, a doctor wearing a Mixed Reality headset can interact with medical content such as x-rays, scans, or test results using hand gestures, while others participate in the consultation from a safe location. The article points out that this way of working also reduces the need for Personal Protective Equipment (PPE), as colleagues can participate from a safe distance.

Example of a virtual user interface projected into the physical world. Image courtesy of Augumenta.

Eve Lindroth, Marketing and Communications at Augumenta, comments, “Today, the devices and applications can be controlled hands-free. This also addresses the problem of being able to work hygienically. You do not need to touch anything to get data in front of your eyes, control processes, or to document things. You can simply use gestures or voice to tell the device what to do. Tap air, not a keyboard.”

AR can help with medical equipment training

AR can also assist medical professionals by providing efficient, interactive training methods that streamline learning new equipment and other necessary procedures. This is critical when experienced staff are unwell and replacements need to be trained as quickly as possible.

Harry Hulme, Marketing and Communications Manager at RE’FLEKT, comments, “We’re seeing that AR is a key tool for healthcare workers during these testing times. For medical training and equipment changeovers, AR solutions substantially reduce the risk of human error while significantly reducing training and onboarding times. Moreover, the time-critical process of equipment changeover is accelerated with AR-enhanced methods.”

AR-based training with REFLEKT ONE and Microsoft HoloLens in medical and healthcare. Image courtesy of RE’FLEKT.

AR supports remote collaboration

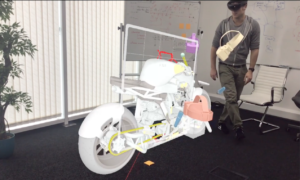

The remote assistance use case can be generalized further to remote collaboration. AR enables physically separated users to “inhabit” a shared virtual space distributed by the AR application. This supports numerous use cases, including shared design reviews. In this scenario, multiple users see the 3D product models and supporting information projected onto their own view of the physical world (from their relative position) via their AR-enabled devices.

Different design alternatives can be presented and viewed in real time by all participants, each of whom can move around their physical space to view the digital rendition from a particular angle. Further, users can annotate and redline the shared environment, providing immediate visual feedback to everyone else. Such capabilities are key to mitigating the restrictions placed on travel, group gatherings, and close-proximity human interaction.

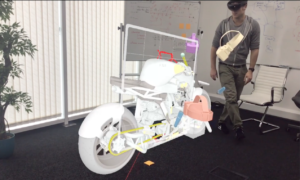

Immersive collaboration: A design review of a motorbike in 1:1 scale with a remote team. Image courtesy of Masters of Pie.

Karl Maddix, CEO of Masters of Pie, comments: “Video conferencing solves the immediate need to bring people together whereas collaboration, as enabled by Masters of Pie, is built for industry to bring both people and 3D data together. Real-time access to the underlying 3D data is imperative for effective collaboration and business continuity purposes.”

AR supports remote sales activities

AR is also proving an effective sales tool, enabling the all-important sales process to continue during the pandemic. For example, home shoppers can examine digital renditions of home appliances, furniture, and other products presented within their own physical space. Moreover, rich, interactive sales demonstrations facilitated by AR allow potential buyers to understand the form, fit, and function of a product without the need for travel, touch, or close interaction with a salesperson.

AR enriches the remote shopping experience, allowing buyers to place and interact with products in their own physical environment. Image courtesy of PTC.

Sarah Reynolds of PTC comments, “AR experiences improve the end-to-end customer experience, improve purchase confidence, and ultimately streamline sales cycles, especially when customers are not able to shop in person.”

Take the next steps

In this editorial we’ve discussed a number of ways in which AR technology can help ensure business and healthcare continuity by mitigating the impacts of the various restrictions placed on the way we work. Recognizing this, many AREA member companies have introduced special offers and services to help industry during the pandemic and we applaud their support. Learn more about them here.

We invite you to discover more about how Enterprise AR is helping industry improve its business processes at The AREA.