Huawei’s Farhad Patel on Taking AR Beyond the Pilot

Since its founding 31 years ago, Huawei has grown to become the largest telecommunications equipment manufacturer in the world and the world’s second largest smartphone manufacturer. With $75 billion in revenue, the global giant supports R&D operations in 21 countries around the world. After Huawei recently rejoined the AREA, we had a chance to talk with Farhad Patel, Technical Communication Manager based in Plano, Texas.

AREA: What has driven Huawei’s interest in augmented reality to date?

FARHAD PATEL: I work in the Innovation and Best Practices group in Huawei’s technical communication department. We’re responsible for developing and delivering the technical information and communication that goes out to our customers in the form of user guides, technical manuals, and instructional guides. A couple of years ago, as part of our innovation objectives, Rhonda Truitt, Director of Huawei’s Innovation and Best Practices in Technical Communication group, decided to research and pilot AR because she thought it could be very useful for information delivery. During our research, we discovered that quite a few AR experiences involved showing someone how to perform a task, and that’s what technical communicators do. We knew that technical communicators were the best people to deliver AR content and saw this as hugely beneficial to our audience. The thing that really appealed to us was that equipment could be automatically identified and content could be automatically displayed without searching. Contextually, this is what a person is looking at, and this is what he or she probably needs. So, it would save our customers time not to have to search for and locate information.

AREA: Are your customers consumers or businesses?

FARHAD PATEL: Both. Huawei develops a full range of telecommunications equipment: switches, routers, servers, software, and the cell phone itself. We sell to telecommunications providers as well as consumers.

AREA: How would you describe the state of Huawei’s internal adoption of AR today?

FARHAD PATEL: I can’t speak for the rest of Huawei, but in the documentation area we have done several very small, very targeted AR projects both in the US and in China. It’s not something that we’re doing corporate-wide for documentation, and it’s not something that we’re even doing product line-wide. So, if there is a certain product, like a power converter or a server, that we want to publicize to our customers, we may turn to AR or use AR as one of the channels to show its features and what it can do. We’ve also used AR at trade shows, but only for certain products. So, we have done multiple small projects, but we haven’t scaled up to include a full product line, since not all content is suitable for AR delivery.

AREA: Do you have a timetable in place for more widespread deployment?

FARHAD PATEL: It’s probably not on the near horizon because AR still has significant challenges that need to be addressed. And one of them is not even related to AR but more the content itself: how do we minimize the content to fit onto the smaller screen of a tablet or smartphone? And we have long procedures, filled with images and tables. That is a challenge. Another challenge is that the tracking and recognition technology is still not where it could be. And ideally, with AR, you could work hands-free. Just put on your glasses and you’d be able to see work instructions superimposed right next to the equipment. We’re still waiting for smart glasses technology to improve before large-scale adoption. So, those are the challenges that we’re struggling with as we try to ramp up the adoption of AR.

AREA: Among those eight pilots was a field technician installation instruction application with HyperIndustry, correct?

FARHAD PATEL: Yes, we’ve done three or four pilots with Inglobe Technologies. The other company that we’ve used is 3D Studio Blomberg, an AREA member. And we have developed AR experiences internally in China. We have also used another AR company, EasyAR.

AREA: What have you learned from the AR pilots that you’ve conducted so far?

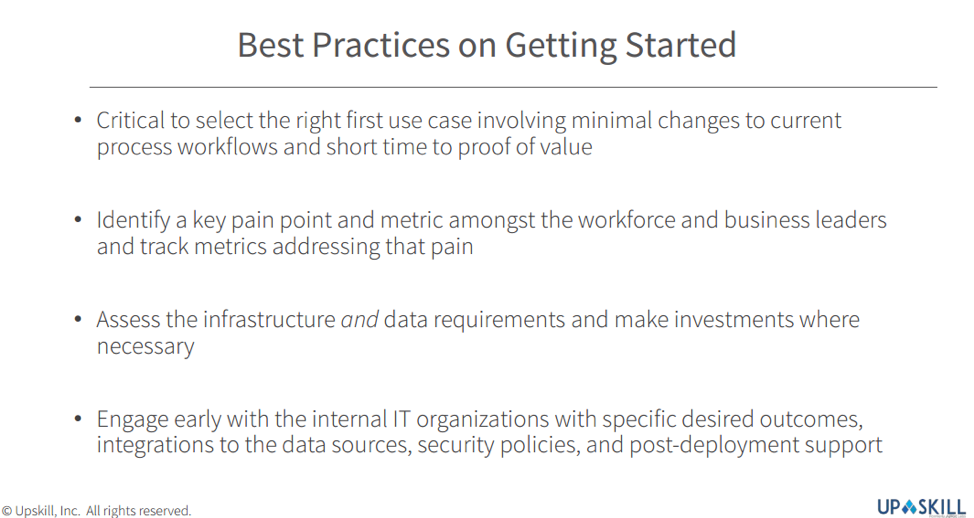

FARHAD PATEL: We’ve improved our knowledge about AR technology – what it can do, what it can’t do, its limitations, its challenges. We’ve come a long way from where we started out. We have also increased the awareness of AR technology and expertise within the company itself. Now, we have many more product lines aware of AR technology and how powerful and successful it can be, because we’ve had a few customer pilots and we’ve demonstrated AR in these pilots, and the user groups and customers have been very appreciative of this new delivery channel for information. But at the same time, we’ve also identified what we cannot do with AR. So, we’re in a wait-and-see mode to see how best to proceed to take AR-enabled information corporate-wide or even product line-wide for appropriate content.

AREA: How are you hoping to benefit from your membership in the AREA?

FARHAD PATEL: The main thing we want to get from the AREA is knowledge. We’re hoping to be able to share what we have learned. And I hope that other AREA members will reciprocate and tell us what they have learned. I would hope to learn more about their best practices, their challenges, what works for them, what doesn’t work for them. For example, if an AREA member has figured out how to minimize the content so that it can be visible on smaller screens, I’m hoping that they share that information.

Networking is another benefit. You know, we learned about 3D Studio Blomberg from the AREA, so getting in touch with other like-minded people to work with their technology. And of course, the best practices, white papers, and webinars that the AREA puts out. For example, recently the AREA developed an ROI calculator. Useful information like that will go a long way in validating our membership fees to the AREA.

AREA: What AREA activities do you expect to participate in?

FARHAD PATEL: The Research Committee is of great value and we try to participate in AREA programs and activities as much as possible.

CES 2018 also revealed some of the gaps that need to be filled for the AR movement to accelerate. First, “the world is seriously devoid of AR talent,” as Jim Heppelmann noted. Secondly, the nature of spatially-based visuals requires complex, high-resolution objects to be delivered to the user. These are generally too large and dynamic to be contained within static apps on a local client and thus need to be web streamed live. The developer community needs to establish protocols for real-time AR asset streams as it has done for web VR in the past.
