ORAU awards 35 research grants totaling $175,000 to junior faculty at its member universities; GDIT and The AREA each fund one grant in a new specialty area

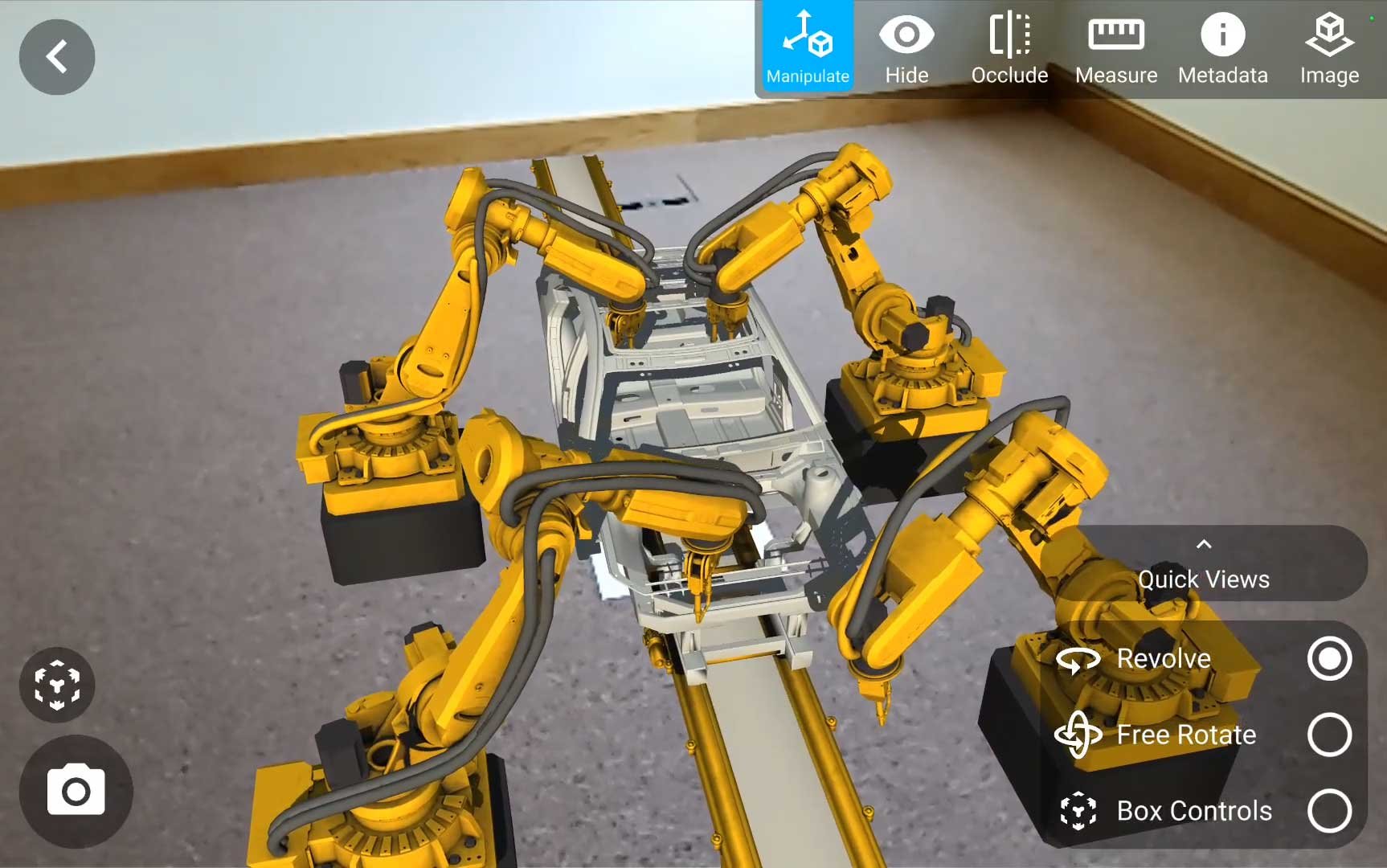

The awards recognize faculty members for their work in any of five science and technology disciplines: engineering and applied science; life sciences; mathematics and computer science; physical sciences; and policy, management or education. GDIT's award funds research in supply chain innovation, while The AREA's award focuses on augmented reality (AR) in the workplace.

“Each year, ORAU supports the research and professional development of emerging leaders at the universities who are members of our consortium,” said Ken Tobin, ORAU chief research and university partnerships officer. “The Powe Award program is always extremely popular and very competitive. We are grateful to join with GDIT and The AREA in expanding the research focus of these awards.”

“The AREA is excited about supporting faculty research in higher education to support the use of AR in the enterprise,” said Mark Sage, AREA executive director. “Our mission is to further the adoption of interoperable AR-enabled enterprise systems.”

Alex McGuire, GDIT’s vice president and supply chain officer, added, “As a supply chain innovator, we’re honored to support ORAU grant recipients and their research to advance and apply next-generation science and technology.”

The Powe recipients, each of whom is in the first two years of a tenure-track position, will receive $5,000 in seed money for the 2024-25 academic year to enhance their research during the early stages of their careers. Each recipient's institution matches the Powe award with an additional $5,000, bringing the total award to $10,000 per winner. Winners may use the grants to purchase equipment, continue research or travel to professional meetings and conferences.

Since the program’s inception, ORAU has awarded 910 grants totaling more than $4.55 million. Including the matching funds from member institutions, ORAU has facilitated grants worth more than $9 million.

The awards, now in their 34th year, are named for Ralph E. Powe, who served as the ORAU councilor from Mississippi State University for 16 years. Powe participated in numerous committees and special projects during his tenure and was elected chair of ORAU’s Council of Sponsoring Institutions. He died in 1996.

Recipients of the Ralph E. Powe Junior Faculty Enhancement Awards for the 2024-2025 academic year are listed below:

| Member Institution | Award Recipient |
|---|---|
| Augusta University | Evan Goldstein |
| Catholic University of America | Dominick Rizk [GDIT Award] |
| Duke University | Di Fang |
| Fayetteville State University | Chandra Adhikari |
| Florida International University | Asa Bluck |
| Iowa State University | Esmat Farzana |
| Iowa State University | Qiang Zhong |
| Louisiana State University | Sviatoslav Baranets |
| Michigan Technological University | Tan Chen |
| Oakland University | Alycen Wiacek [The AREA Award] |
| Ohio State University | Zhihui Zhu |
| Penn State University | Tao Zhou |
| Purdue University | Justin Andrews |
| Tulane University | Daniel Howsmon |
| University of Alabama at Birmingham | Rachel June Smith |
| University of Alabama in Huntsville | Agnieszka Truszkowska |
| University of Arizona | Kenry |
| University of Arizona | Shang Song |
| University of Colorado Denver | Stephanie Gilley |
| University of Colorado Denver | Linyue Gao |
| University of Delaware | Yan Yang |
| University of Florida | Angelika Neitzel |
| University of Houston | Ming Zhong |
| University of Memphis | Yuan Gao |
| University of Mississippi | Yi Hua |
| University of New Mexico | Madura Pathirage |
| University of North Carolina at Charlotte | Lin Ma |
| University of North Texas | Linlang He |
| University of Oklahoma | Kasun Kalhara Gunasooriya |
| University of Texas at El Paso | Eda Koculi |
| University of Utah | Qilei Zhu |
| University of Wisconsin-Madison | Whitney Loo |
| Vanderbilt University | Alexander Schuppe |
| Vanderbilt University | Lin Meng |
| Virginia Tech | Jingqiu Liao |
| Washington University in St. Louis | Xi Wang |
| Yale University | Huaijin Ken Leon Loh |

For more information on ORAU member grant programs, visit https://orau.org/partnerships/grant-programs/index.html.

About ORAU

ORAU provides innovative scientific and technical solutions to advance national priorities in science, education, security and health. Through specialized teams of experts, unique laboratory capabilities and access to a consortium of more than 150 colleges and universities, ORAU works with federal, state, local and commercial customers to advance national priorities and serve the public interest. A 501(c)(3) nonprofit corporation and federal contractor, ORAU manages the Oak Ridge Institute for Science and Education for the U.S. Department of Energy. Learn more about ORAU at www.orau.org.

Like us on Facebook: https://www.facebook.com/OakRidgeAssociatedUniversities

Follow us on X (formerly Twitter): https://twitter.com/orau

Follow us on LinkedIn: https://www.linkedin.com/company/orau

Follow us on Instagram: https://www.instagram.com/orautogether/?hl=en

About the AR for Enterprise Alliance (AREA)

The AR for Enterprise Alliance (AREA) is the only global membership-funded alliance helping to accelerate the adoption of enterprise AR by supporting the growth of a comprehensive ecosystem for enterprises, providers, and research institutions. The AREA is a program of Object Management Group® (OMG®). For more information, visit the AREA website. Object Management Group and OMG are registered trademarks of the Object Management Group. For a listing of all OMG trademarks, visit https://www.omg.org/legal/tm_list.htm. All other trademarks are the property of their respective owners.