Blippar brings AR content creation and collaboration to Microsoft Teams

LONDON, UK – 14 June 2022 – Blippar, one of the leading technology and content platforms specializing in augmented reality (AR), has announced the integration of Blippbuilder, its no-code AR creation tool, into Microsoft Teams.

Blippbuilder is the first platform of its type to combine drag-and-drop functionality with simultaneous localization and mapping (SLAM), allowing creators at any level to build realistic, immersive AR experiences. Absolute beginners can drop objects into a project and, once it is published, those objects stay firmly in place thanks to Blippar’s proprietary surface detection. These experiences will serve as the foundation of the interactive content that will make up the metaverse.

Blippbuilder includes access to tutorials and best practice guides to familiarise users with AR creation, taking them from concept to content. Experiences are built to be engaged with via browser – known as WebAR – removing the friction of, and reliance on, dedicated apps or hardware. WebAR experiences can be accessed through a wide range of platforms, including Facebook, Snapchat, TikTok, WeChat, and WhatsApp, alongside conventional web and mobile browsers.

Teams users can integrate Blippbuilder directly into their existing workflow. The integration is designed with creators and collaborators in mind, whether they are product managers, designers, creative agencies, clients, or colleagues, so organisations can be united in their approach and implementation – all within Teams. Adaptive cards, single sign-on, and notifications, alongside real-time feedback and approvals, provide immediate transparency and seamless integration from inception to distribution. Tooltips, support features, and starter projects also allow teams to begin creating straight away.

“The existing process for creating and publishing AR for businesses, agencies, and brands is splintered. Companies are forced to use multiple tools and services to support collaboration, feedback, reviews, updates, approvals, and finalization of projects,” said Faisal Galaria, CEO at Blippar. “By introducing Blippbuilder to Microsoft Teams workstreams, including team channels and group chats, we’re making it easier than ever before for people to collaborate, create, and share amazing AR experiences with our partners at Teams.”

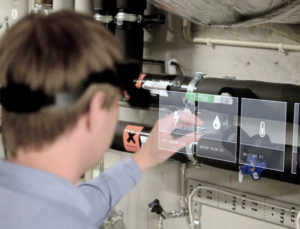

Using the powerful storytelling and immersive capabilities of AR, creators can ‘digitally activate’ everyday topics, objects, and content, from packaging, virtual products, adverts, and e-commerce to clothing and artworks, and transform them into creative, engaging, and interactive three-dimensional opportunities.

Real-life examples include:

- Bring educational content to life, enabling collaborative, immersive learning

- Visualise and discuss architectural models and plans with clients

- Allow product try-ons and 3D visualization in e-commerce stores

- Create immersive onboarding and training content

- Present and discuss interior design and event ideas

- Bring print media and product packaging to life

- Enable artists and illustrators to redefine the meaning of three-dimensional artworks

In today’s environment of increasingly sophisticated user experiences, customers are looking to move their technologies forward efficiently and collaboratively. Having access to a comprehensive AR creation platform is a feature that will keep Microsoft Teams users at the forefront of their industries. Blippbuilder in Teams is the type of solution that will help customers improve the quality and efficiency of their AR building process.

Blippar also offers a creation tool for developers, its WebAR SDK. Blippbuilder for Teams is designed to be an accessible and time-efficient entry point for millions of new users; once AR has been validated in this way, organisations can progress to building experiences with Blippar’s SDK. The enterprise platform boasts the most advanced implementation of SLAM and marker tracking, alongside integrations with the key 3D frameworks, including A-Frame, PlayCanvas, and Babylon.js.