Enterprise Augmented Reality (AR) offers countless opportunities to companies looking to improve the efficiency and effectiveness of their business. Many enterprises are pursuing Digital Transformation initiatives that focus on delivering technology strategies that drive innovation in support of the overall business goals.

Read on as we discuss technology strategies and how they relate to embracing enterprise AR in this, our latest AREA editorial. We’ve also created a handy complimentary podcast (just over 12 minutes) for you to listen to on the go.

Robust technology strategies include the following components:

- Executive overview of strategic objectives

This covers the question: “What are the overall business drivers and how can technology advance them?” Such drivers can be evolutionary goals (e.g., improving the profitability of certain activities within the business or reducing operating costs) or more revolutionary ones, such as opening new lines of business.

- Situational review

The technology strategy review should include a description of the current state of the business, what technologies are being used and how well they are working. The situational review should also offer commentary on the areas of the business (or potential new opportunities) that need to be improved or that offer the greatest potential. These can be specific financial objectives (e.g., “reduce costs and improve efficiency within the services business”) or may address “softer” objectives, such as reducing staff churn and thereby improving expertise transfer and retention.

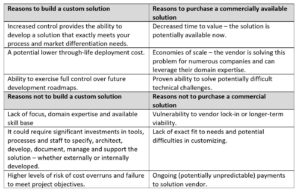

- Technology assessment and selection

As strategy development continues, it quickly becomes important to assess which technologies can support the business needs. At this phase, it’s important to take an outside-in view and gain perspectives on industry trends, perhaps by hiring external experts or engaging with industry bodies such as the AREA, in order to select the most appropriate technology.

The AREA can, for example, provide a neutral and independent view of the current state of the art of the technology, its application to specific use cases and case studies showing how the technology is being used within various industrial sectors.

- Strategic planning, resourcing and leadership

Next, the implementation plan for the technology strategy is determined. This phase should clearly identify potential vendors, internal staffing requirements and, most importantly, the internal champions and leadership (stakeholders) necessary to ensure alignment and roll out the solutions.

It is often helpful in this section of the strategy definition to include a maturity model: an internal roadmap showing how adoption and leverage of the technologies within the strategy are expected to grow over time.

- Deployment

Lastly, the strategy execution – i.e., the rollout – commences. This will often include staff training, systems integration, custom development and more. Many companies will also implement a governance model that ties key performance indicators back to the original goals defined in the strategy.

This framework is typically used to support significant technology overhauls or new implementations, but what does it mean in relation to adopting enterprise AR technologies?

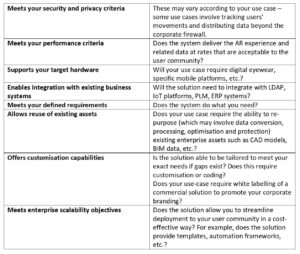

Depending upon how and where AR is to be used, one or more of the following considerations will arise:

- Process impacts

Often, the adoption of AR will involve changing how certain business processes are performed. This will involve IT impacts (new IT infrastructure to manage the process) and human impacts – how the “new way of working” is rolled out to the organization.

- New hardware implications

AR may involve the use of new hardware technologies (e.g., digital eyewear, wearables), so the IT organization must be actively involved in supporting this hardware, which may initially apply to only a small, select proportion of the workforce.

- The “content creation to consumption” pipeline

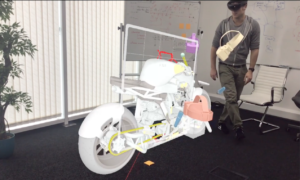

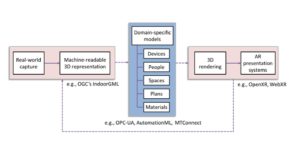

Many AR solutions require the development of new content or may reuse existing digital assets. These may include procedural definitions (step-by-step instructions), 3D models (ideally derived from the CAD master models) and more. This data pipeline needs careful planning and architecting to ensure that enterprise needs for scalability and cost control are met.

- Data and systems integration

Some AR solution deployments harness AR’s unique ability to place digital content directly into the visual context of a user performing a task. As this is a key selling point of AR, it is important to consider the architecture needed to ensure that data from enterprise business systems, such as PLM, SLM and ERP, and from IoT data streams can be presented within the AR application. Ideally, the AR technology should complement existing technology platforms and tools by incorporating mechanisms to communicate with these systems and display their information.

- Pace of change

As with any new technology domain, the pace of change can be dramatic. A robust technology strategy should be flexible in its definition in order to adapt to later developments or to offerings from new vendors, rather than be locked into a potentially obsolete technology or insolvent vendor.

- Human factors, safety and security

AR solutions exhibit other factors that should be incorporated into a robust technology strategy, including safety aspects (users are now watching a screen rather than their surroundings and may lose situational awareness), and security (AR devices may be delivering high-value intellectual property that must be secure against malicious acts), amongst others.

Some of these challenges may be familiar to IT executives, while others may be new.

With these points in mind, and from the perspective of determining, planning and implementing a technology strategy, what does this mean to companies wishing to embrace enterprise AR?

Given the nature of the earlier points, and the depths of integration that may be required, one might think that AR needs to be considered only as part of a ground-up technology strategy definition. However, as with many technologies, integration and planning can happen at a later stage.

Mike Campbell, Executive VP, Augmented Reality Products at PTC, comments: “Augmented Reality may be new, and its impact may be disruptive, but that doesn’t mean it can’t be woven seamlessly into your existing strategies. AR can plug into and enhance your existing technology stack, improving productivity and communications, helping to modernize training, and ultimately driving more contextual insights for employees.”

Mike makes an important point. Given that AR offers new “windows” into existing data and systems and provides new process methods, it remains important for many businesses that any disruption is a positive one for their business and not a negative one for their existing IT systems infrastructure. Meshing with existing infrastructure is key to enterprise adoption.

Mike Campbell continues: “Leaders in the AR industry work hard to make software and hardware scalable and simple for enterprise implementation. It can be integrated into a technology strategy to enhance the solutions you already have to offer in an efficient and engaging way to visualize information. You can leverage your existing CAD models or IoT data and extend their reach through AR, creating a strong digital thread in your organization and helping your employees access critical digital data in the context of the physical world where they’re doing their work.”

Given the fast pace of change in emerging technologies such as AR, businesses typically prefer not to be locked into the technological minutiae of specific vendors and clearly wish to leverage the investment in applications across multiple domains of their business, where it makes sense to do so.

Mike Campbell puts it this way: “Choosing a cross-platform AR technology that partners with powerful hardware, whether headsets or tablets, can give you more flexibility in how you want to deploy this information across your workforce, enabling you to provide solutions for employees in the field, on the factory floor, and even in the back office.”

AR can be considered a strategic technology initiative in its own right, but the real power of AR is unleashed when it complements and supports other technology and business strategies. AR often shines brightest at the intersection of Product Lifecycle Management (PLM), the Internet of Things (IoT) and, often, Service Lifecycle Management (SLM) solutions.

AR is often used as an industrial sales and marketing tool, which typically requires only a thin veneer of strategic alignment with enterprise systems. However, the greatest value of enterprise AR comes when it is integrated with other technology strategies as part of a larger, holistic strategic technology arsenal for transforming specific business areas.

Commenting on this, Mike Campbell opines: “How exactly you choose to deploy AR will depend on your business needs. If you have existing CAD models, you can build these into AR experiences to offer immersive training, maintenance, or assembly instructions that overlay these models on top of the physical machines with which they correspond.

“This can drastically improve your workforce productivity and shorten the time it takes to train someone by offering in-context information where and when it’s needed. If you have IoT data, enabling employees to visualize this data in AR can provide real-time insights into the machines they’re working on, letting them quickly and easily identify problems while on the shop floor.”

In summary, considering how technology strategies are often defined, AR can be treated as revolutionary or evolutionary, enabling businesses to try, assess, learn and expand without disrupting existing IT infrastructure.

We’ll conclude with one final thought from Mike Campbell: “The question really isn’t ‘how does AR fit into a company’s technology strategy’, but how do you want it to fit. There are countless ways AR can bring value to your business, and AR software and hardware providers are continually improving their technology to make integration powerful and simple.”

That is exactly what we’re supporting at the AREA. We’re helping a growing community of users and vendors of AR to share knowledge and tools along with developing expertise and best practices to ensure that AR adoption continues to grow in 2020 and beyond.

Within the AREA, we have several active committees that are committed to developing and driving best practices. To find out more, please visit thearea.org.